Folks, you probably saw the announcement: the new distribution came out of IGTF on Sunday.

Overall the release is not urgent. I meant to send out an evaluation earlier but ran out of time.

--jens

Annotated changes:

* Added accredited classic Indian Grid CA (IGCA) (hash da75f6a8) (IN)

Good for those folks over in Cambridge working on EUIndiaGrid. INFN no longer has to be the catch-all.

* Updated IUCC root certificate with extended life time (IL)

Israel: they recently went through a self audit and I need to review this audit, I've been remiss (I was assigned as a reviewer). They re-signed their certificates to extend the lifetime (previously till summer 09) and fix some bugs. It is still the same public key but the fingerprint will have changed. Unfortunately they introduced some more minor problems...

* Updated BEGrid (web, CRL) and UCSD-PRAGMA (web) URL metadata (BE, AP)

These are just the .info files.

* New BEGrid2008 root certificate (transitional) (BE)

Old one due to expire end of Feb 09, and it's still in there. Belgium may call the new one transitional(?) but its lifetime is 10 years.

Now here's the rub. If the old one expires in Feb, and the new one is created just now, what signed the existing valid certificates?

* Extended life time of the SEE-GRID CA (SEE)

Again using the same key as before, the lifetime of the existing certificates has been extended from expiring August 09 to August 2014. This is normally "harmless" except for the Mozilla NSS bug where a browser gets confused if it sees a different version of the "same" certificate at the remote end. They did in fact keep the same serial number, so they will get this problem. (Sometimes you can change the serial, sometimes not - another long story.)
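To make the "same but different" problem concrete, here is a minimal sketch of the check a client would effectively be tripping over. The `CertSummary` record and all the values in it are made up for illustration; a real check would read these fields out of the actual certificates.

```python
from dataclasses import dataclass, replace


@dataclass(frozen=True)
class CertSummary:
    """Hypothetical, hand-filled summary of a CA certificate."""
    subject: str
    serial: int
    key_id: str        # stands in for a hash of the public key
    fingerprint: str   # stands in for a hash of the whole certificate
    not_after: str


def same_cert_different_version(a: CertSummary, b: CertSummary) -> bool:
    """True when two certificates share subject, serial and key but differ as a whole."""
    return (
        a.subject == b.subject
        and a.serial == b.serial
        and a.key_id == b.key_id
        and a.fingerprint != b.fingerprint
    )


# Made-up values: only the lifetime (and hence the fingerprint) changes.
old = CertSummary("/DC=example/CN=Example Grid CA", 0, "key-1", "aa:aa", "2009-08")
new = replace(old, fingerprint="bb:bb", not_after="2014-08")

print(same_cert_different_version(old, new))
```

Changing the serial in `new` would make the function return `False`, which is the case where the two versions can be told apart by issuer and serial.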

"SEE" by the way is South Eastern Europe. Another EU thing. (www.see-grid.org, FP6 funded project)

* Included CRL for NCSA SLCS CA (US)

This was subject of a (yet another) long discussion. (In the world of grid certificates, long and heated is the norm for a discussion.)

The simple version is that SLCS is Short Lived Credential Service. They should not need to issue CRLs, since short lived credentials by definition live short lengths of time. However, much of the middleware tends to work better if it can read CRLs, so even an empty CRL keeps it happy. FNAL have done this for a while too.

There has been more discussion regarding the need to revoke even short lived credentials, but in general that is not yet feasible.

* Temporally suspended NGO-Netrust CA (SG)

They were suspended because of an issue with the CRL lifetime. They have a very short CRL (1 day) which can cause grid stuff to "break" because an "expired" CRL blocks everything. Another long technical discussion.
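A rough sketch of why a one-day CRL is fragile: if the copy a site holds is already a few hours old when the CA's web server becomes unreachable, there is very little slack before it expires. The 6 hour age, the 20 hour outage and the 30 day comparison lifetime below are illustrative assumptions, not figures from the discussion.

```python
from datetime import timedelta


def outage_tolerated(crl_lifetime: timedelta,
                     age_of_local_copy: timedelta,
                     outage: timedelta) -> bool:
    """True if the locally held CRL is still valid when the outage ends."""
    remaining = crl_lifetime - age_of_local_copy
    return outage < remaining


copy_age = timedelta(hours=6)    # assumed age of the CRL a site holds
outage = timedelta(hours=20)     # assumed outage of the CRL download URL

# One-day CRL: only 18 hours of validity remain, so the outage is not survived.
print(outage_tolerated(timedelta(days=1), copy_age, outage))

# A 30 day CRL (assumed for comparison) rides out the same outage easily.
print(outage_tolerated(timedelta(days=30), copy_age, outage))
```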

They also have some weird stuff in their certificate, like private key usage period. Sometimes the need to bring in commercial or gov't CAs, non-Grid CAs, makes it necessary for us to accept strange things in certs - but then those strange things can go on to break stuff on the grid because the grid is special.

* Withdrawn expired old PK-Grid CA (d2a353a5, superseded by f5ead794) (PK)

This is completely safe.

* Experimentally added Texas Advanced Computer Center TACC Root, Classic, and MICS CAs to the experimental area (US)

This is interesting, I was a reviewer on the root (indeed I wrote the first set of guidelines for reviewing a root that doesn't issue end entity certs - still mean to finish that and push it through OGF.)

A "MICS" is Member Integrated Credential Service or something to that effect. The basic idea is it's fairly closely tied to a carefully maintained site database. Of course this sort of thing won't work in general out in the grid world when you have one CA per site database (again a long story), but TACC do need this sort of thing. Note it's experimental, so it's not accredited.

Eventually TACC should have two CA certs, one being a Classic and one a MICS, both tied under the same root.

Thursday 18 December 2008

Wednesday 10 December 2008

Winning hearts and minds?

(Not really operations but this is my least-inappropriate gridpp blog for this...)

I was invited by Lambeth City Learning Centre (www.lambethclc.org.uk) to give a talk about "computing and science" to schoolchildren. They were 14 year olds, and it's the first time I've given talks to children.

My first main point was that increases in computing performance enable researchers in every single field of research to do more, particularly in a world where now everybody needs to collaborate. I used the grids as an example, talking about GridPP and NGS. Only one of them had heard of LHC (or would admit to it).

I used games as an analogy - they all knew those. Games visualise a world which lives inside the machine, they "collaborate" by playing with others.

The other main point I made was that they need to think. The computer is stupid: it doesn't know that it's flying a Concorde, calculating the length of a bridge, or folding proteins. I gave some examples of datasets in many everyday contexts which say one thing by themselves but say something very different when you think more carefully about the data and put it in context, both historic examples and very recent ones from the news.

I definitely enjoyed giving the talk (it had been buzzing in my mind for over a month). I think they enjoyed it, too, although I was slightly worried some topics were too adult - I was also talking about computers doing epidemiology, emergency medicine, and nuclear weapons maintenance, the point being they're safer to do inside the computer than outside.

Curiously I had understood they were all girls so I had looked up quotations from women astronauts etc. What turns up on the day? Boys, every last one o'them.

Thursday 9 October 2008

September CPU efficiencies

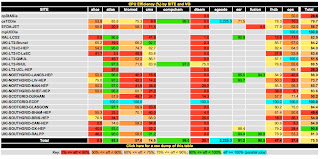

APEL data provides a view of CPU efficiencies (CPU time/Wall time) across UKI sites. The table here shows the results for September.
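For anyone wanting to reproduce the ratio from their own accounting records, a minimal sketch follows. The job tuples and numbers are made up; the only thing taken from the text is the definition, CPU time divided by wall time.

```python
def cpu_efficiency(cpu_seconds: float, wall_seconds: float) -> float:
    """CPU efficiency as CPU time / wall time."""
    if wall_seconds <= 0:
        raise ValueError("wall time must be positive")
    return cpu_seconds / wall_seconds


def site_efficiency(jobs: list[tuple[float, float]]) -> float:
    """Aggregate efficiency for a site: total CPU time over total wall time."""
    total_cpu = sum(cpu for cpu, _ in jobs)
    total_wall = sum(wall for _, wall in jobs)
    return cpu_efficiency(total_cpu, total_wall)


# Made-up (cpu_seconds, wall_seconds) pairs for three jobs at one site.
example_jobs = [(3000.0, 3600.0), (600.0, 3600.0), (1800.0, 1800.0)]
print(f"{site_efficiency(example_jobs):.2f}")
```

Summing before dividing weights long jobs more heavily than a plain average of per-job ratios would, which is usually what you want for a site-level figure.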

Monday 29 September 2008

New IGTF distribution 1.25

What's new? NCHC is back in (Taiwan), new keys not vulnerable to Debian incident. A number of metadata files were updated.

There is a new group, the IGTF RAT, Risk Assessment Team, which covers the whole world, timezone-wise (or close enough). The idea is when a vulnerability is announced via the IGTF, the RAT assesses the risk and alerts the CAs concerned. The idea, of course, comes from the Debian incident where "most" CAs had responded within a week but "most" is not good enough. So the RAT ran a test, alerting each CA to see how long it took to respond via the address advertised in the .info file. Of all currently accredited CAs, about 75% responded within 24 hours (including the UK!), but some took over a week and a second prodding. For many CAs it highlighted communications problems in their infrastructure as mail was being flagged as spam etc; these should be addressed in this release.

From a day-to-day operations point of view, you may be relieved to find the new FNAL certificate updated in the "experimental" folder, since the old one was due to expire soon.

https://dist.eugridpma.info/distribution/igtf/current/

Tuesday 9 September 2008

All green

Thursday 21 August 2008

Play it again SAM

LHCb have recently made a change to their software install SAM tests - as noted at today's WLCG Daily meeting:

Some LHCB specific SAM CE tests were systematically failing everywhere because of a bug in one of the modules for installing software. These tests have been temporary set "not critical" until a fix will be in place and fully tested.

However for once Glasgow knew about these failures at 23:45 last night - The WLCG monitoring plugin did exactly what it should, picked up the SAM failure on its poll of the test results and notified glasgow by email.

The latest (SVN) version of the ncg.pl script has support for multisite configuration by the inclusion of <NCG::SiteSet> sections in the config. Not tested it yet - that's on the rest of the week's TODO list.

    <NCG::SiteSet>
      <File>
        DB_FILE=$MAIN_DB_FILE
      </File>
    </NCG::SiteSet>

Wednesday 2 July 2008

A view on Steve's new network tests

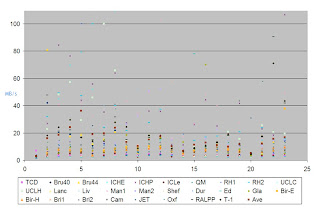

Earlier today Steve released results from his new network tests (see his blog entry). As a first step to looking at the data, the plot above shows the scatter of the "site b SE to site a CE" results. For this view rates are capped at about 100 Mb/s (which impacts the UCLH-UCLC 164.5; RH1-RH1 764.9 and RH2-RH2 243.1 Mb/s results). As Steve mentions it is too early to draw conclusions but there are some definite patterns present in this data. The x-axis is the SE used in the order given in Steve's table (thus 1 is TCD... 5 is RH1 and so on).

Friday 20 June 2008

CCRC post-mortem workshop - talk summaries

WLCG CCRC post-mortem workshop – summary

Agenda: http://indico.cern.ch/conferenceTimeTable.py?confId=23563

First part of workshop was on storage. Generally observed that there was insufficient time for middleware testing.

CASTOR: Saw SRM ‘lock-ups’ – CASTOR network related. The move to SL4 is becoming urgent. There needs to be more pre-release testing. They want to prioritise tape recalls. [UK: RAL tape servers mentioned as “flaky”].

STORM – monitoring is an issue. A better admin interface is needed together with FAQs and improved use of logs.

dCache (based on SARA) – spotted many problems in CCRC (e.g. lack of movers). Wrong information provided by SRM. Crashing daemons were an issue. There were questions about the development roadmap. Smaller changes advertised but more implemented. For tape reading there was not enough testing.

DPM (GRIF): Balance with file system not right – some full areas while others not well used. Balancing is needed. Main uncertainty seems to be the xrootd plugin (not tested yet). Advanced monitoring tools would be useful.

Database (MG) – ATLAS 3D milestones met. Burst loads are seen when data reprocessing jobs start on an empty cluster.

Middleware – no special treatment in this area for CCRC. Numerous fixes published – CE; dCache; DPM; FTS/M; VDT. Middleware baseline was defined early on. Main question is “are communications clear?” EMT – deals with short-term plans and TMB the medium-term plans. Aside: Are people aware of the Application Area Repository which gives early access to new clients?

Monitoring:

- no easy way to show external people status of experiment. GridView used for common transfer plots. Sites still bit disoriented understanding their performance.

- ATLAS – dashboard for DDM. Problems generally raised in GGUS and elog. Still difficult to interpret errors and correlate information to diagnose (is it a site or experiment problem?). Downtime on dashboard would be useful together with a heartbeat signal (to check sites in periods of low activity).

- CMS used PHEDEX and GridView. SiteStatusBoard was most used. Commissioning of sites worked well. Better diagnosis of failure reasons from the applications is required.

- LHCb – data transfer and monitoring via DIRAC. Issue with UK certificates picked up in talk.

- GridView is limited to T1-T1 traffic. FTM has to be installed at all sites for this monitoring but it was not a clear requirement.

- Core monitoring issue now relates to multiple information sources – which is the top view. The main site view should be via local fabric monitoring – this is progressing.

- Better views of the success of experiment workflows are needed. Getting better for critical services.

Support

ALICE positive about ALARM feedback.

CMS: Use HyperNews and mail to reach sites. Sites need to be more pedantic in notifying ALL interventions on critical services (regardless of criticality).

LHCb: Speed of resolution still too dependent on local contacts. Problem still often seen first by VO.

ATLAS – use a ticket hierarchy. Shifters follow up with GGUS and in daily meetings. They note it is hard to follow site broadcast messages.

ROCs: Few responses before the meeting. There was a question about where (EGEE) ROCs sit in the WLCG process.

GGUS: Working on alarm mechanisms for LHC VOs – these feed into T1 alarm mail lists. Team tickets are coming – editable by whole shift team. Tickets will soon have case types: incident, change request, documentation. An MoU area (to define agreements at risk with problem) and support for LHCOPN also being implemented.

Tier-2s: Initial tuning took time – new sites inclusion, setting up FTS channels etc.

Most T2 peak rates > nominal required. T2 traffic tended to be bursty. One issue is how to interpret no or (only) short lived jobs at a site. Much time is used to establish that everything is normal! Mailing lists contain too much information – often exchanges are site specific. Direct VO contacts were found to be helpful.

What should T2s check on the VO monitoring pages? What are the VO activities?

Tier-1: (BNL-ATLAS): Went well. Found problem with backup link but resolved quickly.

FNAL(CMS): Jobs seen to be faster at CERN (this was IO not CPU bound – SRM stress authn/authz). Site fully utilized. Used file families for tape. Request instructions on who to inform about irregularities. There is no clear recommended phedex FTS download agent configuration.

PIC: Site updates were scheduled for after CCRC. The number of queued jobs sometimes led to excessive load on CE. Wish to set limit with GlueCEPolicyMaxWaitingJobs. Need to automate directory creation for file families. Import rates ok. Issue with some exports – FTS settings? Also concern with conditions DB file getting “hot” ~400 jobs try to access same file and pool hung as 1Gb internal link saturated. CMS skim jobs filled farm and this led WN-SE network switches (designed 1-2 MB/s/job) to be saturated and become a bottleneck – but farm stayed running. The CMS read ahead buffer is too large.
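A back-of-envelope for the "hot" file case helps show why the pool hung. This is only a sketch: it assumes decimal units, an even share of the link between jobs, and no protocol overhead, none of which was stated in the talk.

```python
LINK_GBIT_PER_S = 1.0   # internal link quoted in the talk
JOBS = 400              # approximate number of jobs hitting the same file

# Even share of the link per job, in Mb/s and MB/s.
per_job_mbit = LINK_GBIT_PER_S * 1000 / JOBS
per_job_mbyte = per_job_mbit / 8

# Aggregate demand if every job wanted the low end of the 1-2 MB/s design figure.
demand_gbit = JOBS * 1.0 * 8 / 1000

print(f"share per job: {per_job_mbit} Mb/s = {per_job_mbyte} MB/s")
print(f"demand at 1 MB/s/job: {demand_gbit} Gb/s on a {LINK_GBIT_PER_S} Gb/s link")
```

Under these assumptions each job gets roughly a third of a megabyte per second, well below the 1-2 MB/s/job the WN-SE switches were designed for.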

IN2P3: Main issue was LHCb data access problems with dCache-managed data via gsidcap. Regional LFC for ATLAS failed.

SARA: Quality control of SRM releases is a problem

RAL: CE overload after new WNs installed. Poor CMS efficiency seen - production work must pre-stage. Normal users capped to give priority to production work. CASTOR simultaneous deletes problem. CASTOR gridftp restarts led to some ATLAS transfer failures. Target data rates are no longer clear. Need a document with targets agreed. Need monitoring which shows site its experiment contribution vs expectation.

T0: Powercut was “good” for debugging the alert procedure (how to check etc) and find GC problem. SRM reliability remains an issue. More service decoupling planned. SRM DBs will move to Oracle. More tape load was expected in CCRC. Tuning bulk transfers still needed. Many repeat tape mounts seen per VO. Power cut planning – run a test every 3 months. Changed to publish CPUs in place of cores to get more work. Publish cores as physical CPUs. Pilot SLC4 gLite3.1 WMS used by CMS.

ATLAS: Fake load generator at T0. Double registration problem (DDM-LFC). NDGF limited to 1 buffer in front of 1 tape drive (limits rates). T1-T1 RAL issue seen to INFN and IN2P3 (now resolved). Slight issue with FZK. “Slightly low rate – not aggressive in FTS setting”. Global tuning of FTS parameters needed. Want 0 internal retries in FTS at T1s (action). Would like logfiles exposed. UK CA rpm not updated on disk servers caused problem for RAL. 1 transfer error on full test. T1-T1 impacted by power cut. T1->T2s ok and higher rates possible. CASTOR problems seen: too many threads busy; nameserver overload; SRM fails to contact Oracle backend. For dCache saw PNFS overload. Problematic upgrade. StoRM need to focus on interaction between gridftp and GPFS.

Sites need to instrument some monitoring of space token existence (action). CNAF used 2 FTS servers (1 tape and 1 disk) – painful for ATLAS. Most problems in CCRC concerned storage. Power cuts highlighted some procedures are missing in ATLAS.

LHCb: No checksum probs seen in T0->T1 transfers. Issue with UK certificates hit RAL transfers. Submitted jobs done/created for RAL = 74%. (avg. 67%). For reconstruction a large number of jobs failed to upload rDST to local SE (file registering procedures in LFC). RAL success/created = 68%. For RAL the application was crashing with seg fault in RFIO. [Is this now understood?]. The fallback was to copy i/p data to WN via gridFTP. Stripping: highlighted major issues with LHCb book keeping. Data access issues mainly for dCache.

CMS: Many issues with t1transfer pool in real life. CAF – no bottlenecks. T0->T1 ok. Like others impacted by UK CA certificate problem. T1->T1: RAL ok. [note: graph seems more spread than other T1s]. T1->T2 aggregates for own region similar/lower than others (#user jobs limited). For UK, no single dataset found so 22 small datasets used. [Slide 66: RHUL no direct CMS support – some timeout errors. RALPP – lacked space for phase 2. QMUL not tested. Bristol – host cert expired in round two so no additional transfers. Estonia – rate was good!] General: LAN overload in some dCache sites: ~30MB/s/job – this is thought to relate to read_ahead being on by default – issue for erratic reads. Prod+Anal at T2s: UK phase 1 ok but number of jobs small. Phase 2 (chaotic job submission) and Phase 3 (local scope DBS) results not clear and work continues.

ALICE: Unable to load talk

Critical services: ATLAS: Use service map http://servicemap.cern.ch/ccrc08/servicemap.html. ATLAS dashboard is the main information source. Hit by kernel upgrades at CERN. Quattorized installation etc. works well.

ALICE: Highest rank services are VO-boxes and CASTOR-xrootd. Use http://alimonitor.cern.ch

CMS: Has a critical services Task Force. CMS critical services (with ranking): https://twiki.cern.ch/twiki/bin/view/CMS/SWIntCMSServices. Collaboration differentiates services (to run) and tasks (to deliver).

LHCb: List is here: https://twiki.cern.ch/twiki/pub/LHCb/CCRC08/LHCb_critical_Services2.pdf

Proposed next workshop – 13-14 Nov. Other meetings to note EGEE’08 and pre-CHEP workshop.

Thursday 12 June 2008

CCRC Post Mortem workshop (day1 - PM)

Monitoring

Talk about how everything feeds to gridview, but conveniently skips the lack of detail you normally find in gridview. Mentioned ongoing effort for sites to get an integrated view of VO activity at their site. Too many pages to look at.

Once again 'nice' names for sites were discussed - we want the 'real' name that a site is known as. Network monitoring tools were discussed (well, lack of them!) as was the issue when a service is 'up' but not performing well.

Experiments quickly see when a site fails but sites don't know this. Nice to have a mashup of the type of jobs vo's are running at site (production / user) etc

Support

Bit Rot on Wiki Pages - they have to be kept up to date. (CMS)

Basic functionality tests (can a job run / file get copied) SHOULD be sent directly to sites (ATLAS)

Discussions - Downtime announcement only goes to VO managers by default - users not informed. RSS of downtimes can be parsed. How do sites do updates - if they announce SE downtime, jobs still run on CE but fail as no storage. Do they take entire site down?

perhaps flag the CE as not in production?
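On the "RSS of downtimes can be parsed" point, a minimal sketch of what that could look like with the standard library. The feed content and site names below are entirely made up, and a real downtime feed may well use a different layout; this only shows the plain RSS 2.0 case.

```python
import xml.etree.ElementTree as ET

# Made-up RSS 2.0 snippet standing in for a real downtime feed.
SAMPLE_FEED = """<rss version="2.0">
  <channel>
    <title>Example downtime feed</title>
    <item>
      <title>EXAMPLE-SITE-A: SE maintenance</title>
      <description>Storage element offline for upgrade</description>
    </item>
    <item>
      <title>EXAMPLE-SITE-B: network intervention</title>
      <description>Router replacement</description>
    </item>
  </channel>
</rss>"""


def downtime_titles(feed_xml: str, site: str) -> list[str]:
    """Return the titles of feed items that mention the given site name."""
    root = ET.fromstring(feed_xml)
    titles = (item.findtext("title", default="") for item in root.iter("item"))
    return [title for title in titles if site in title]


print(downtime_titles(SAMPLE_FEED, "EXAMPLE-SITE-A"))
```

Something like this could feed a user-facing page or a job submission wrapper, so that users (and not just VO managers) see that an SE is down.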

"view from ROCs" - No input from the UKI-ROC - drowned out in email?

Procedures to follow when you get big (power) outages.

[as an aside - the power cut at CERN - scotgrid-glasgow noticed that the proxy servers went down within the hour due to nagios email alert sitting in inbox]. Watch out for power cats :-). Perhaps we need an SMS to EGEE-Broadcast gateway if sending broadcast is too hard for operator staff.

GGUS updates - Alarm tickets in progress (via email or portal). Being extended for the LHCOPN to use to track incidents. A vigorous discussion ensued about the number / role of people who were allowed to declare an experiment down (even if no alarms yet seen) to call out all the relevant people.

Tier2 report - Had phone call and missed part of the talk.

Tier1/PIC - had an unfeasibly large cache in front of T1D0 due to T1D1 tests (~100TB). CMS skimming jobs saturated SE switches but service stayed up.

Need for QA/Testing in middleware. Need to do something with hot files - causes high load on afs cell.

RAL - pretty much as expected. Few issues - Low CMS efficiency, user tape recalls, OPN routing. Overall expected transfer rates not known in advance. Yet another request for a site dashboard.

Should we force the experiments to try and allocate a week to do a combined reconstruction session to check that we're ready?

Right - that's it for now. Off to generate metrics for the curry....

CCRC Post Mortem workshop (day1 - PM)

More from the CCRC post mortem meeting

Middleware

1) No change from normal release procedures. FTM didn't seem to be picked up as a requirement by Tier-1s (however RAL had their own already in place). Problem was that there wasn't a definitive software list, just the changes needed. CREAM is coming (slowly) and SL5 WN perhaps by Sept 08

SL5 plans - ATLAS would like to get software ready for winter break (build on 4 and 5)

CMS - Switch to SL5 in the winter shutdown. Like to have software before that for testing.

CERN - lxbatch / lxplus test cluster will be available from Sept onwards (assuming hardware)

CCRC Post Mortem workshop (day1 - AM)

Some crib notes from the CCRC postmortem workshop - these should be read together with the Agenda. Where I've commented, it's on parts that I consider particularly useful.

Storage

1) Castor / Storm

GPFS vs GridFTP block sizes - GPFS 1MB, SL3 GridFTP 64K, SL4 GridFTP 256K

Reduced gridftp timeouts from 3600s to 3000s

CMS had problems with GPFS s/w area - latency issue [same as ECDF?] - partly due to switch fault but they migrated the s/w area to another filesystem

RAL - General slowdown needed DB intervention. LHCb RFIO failures. Need to prioritise tape mounts - Prodn >>> users.

2) CASTOR development - Better testing planned and in progress. Also logging improved.

3) dCache (SARA) 20 gridftp movers on thumpers cf 6 on linux boxes. gsidcap only on SRM node itself. Good tape metrics gathered and published on their wiki. HSM pools filled up due to orphaned files - removed from pnfs namespace but not deleted. Caused by failed FTS transfers (timeout now increased)

... coffee time :-)

4) DPM (GRIF/LAL) - Mostly OK -- some polishing required (highlighted dpm-drain) and Greig's monitoring work.

Databases

1) 'make em resilient' - have managed 99.96% availability (3.5h downtime/yr)

They have upgraded to new hardware on the oracle cluster @ Tier0

Lessons learnt from the powercut - make sure your network stuff is UPSd esp if you have machines you expect to be up...

2) ATLAS - rely on DB for reprocessing capable of ~1k concurrent sessions. Some nice replication tricks to (and from) remote sites such as the muon calibration centres

3) SRM issues - went more or less according to plan. Understood and corrected issues found. Otherwise - seemed to be within 'normal' load range that they were used to.
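As a quick sanity check on the availability figure quoted in item 1 above (my own back-of-envelope sketch, not something shown at the workshop): 99.96% availability leaves 0.04% of a 365-day year as downtime, which comes to about 3.5 hours. The function name is made up for illustration.

```shell
#!/bin/sh
# Hypothetical helper: hours of downtime per 365-day year
# implied by a given availability percentage.
downtime_hours_per_year() {
    # LC_ALL=C keeps the decimal point independent of the locale.
    LC_ALL=C awk -v pct="$1" \
        'BEGIN { printf "%.2f\n", (100 - pct) / 100 * 365 * 24 }'
}

downtime_hours_per_year 99.96
```

So the "3.5h downtime/yr" in the talk is just 0.0004 x 8760 hours, rounded.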

Wednesday 7 May 2008

Oracle sick at RAL - GOCDB affected

As has been broadcast several times, RAL suffered a power glitch which has had a bad effect on some services. One of those still outstanding is the GOCDB. See https://cic.gridops.org/index.php?section=rc&page=broadcastretrieval&step=2&typeb=C&idbroadcast=32223 for details.

Posted here to raise awareness as people may use the RSS feed..

Monday 28 April 2008

Impact of a SPOF

Last week connectivity to the RAL Tier-1 was affected for many hours due to a large number of firewall connections being left open. The ops SAM tests quickly showed how the Tier-1 can act as a Single Point of Failure with the current setup. The evidence is in this gridpp grid status snapshot in the 24hrs column. Note that Dublin and ScotGrid sites were unaffected as they now run their own RB/WMS and top-level BDII.

Thursday 14 February 2008

Tier1 Joins the Blogosphere

Nice to see the RAL Tier-1 site has a blog over at http://www.gridpp.rl.ac.uk/blog/. I've duly added it to the GridPP Planet site and picked up this rather useful snippet about the Areca RAID Cards.

Friday 8 February 2008

CERN VOMS woes

People's v-p-inits stopped working - from the CERN VOMS. Turns out the UK CA certs had been taken out, in the mistaken belief they had been revoked... Without consulting me, of course. Apart from a flurry of email, I called Remi this morning asking him to put them back and he said he'd do that today.

It was the root cert that was "suspected compromised" (but not "compromised", which is why we're ok for now - a resourceful attacker would need O(years) to do something with an encrypted key). And the e-Science CA itself was fine.

Eventually we may need to do something more to get people off the old CA chain. Certain relying parties (in a certain large country) have expressed concerns, which, even if there is no compelling technical reason to be concerned, may need addressing. We can re-sign old certificates under the new chain (so you keep your private key - we can do that), or just ask people to renew a bit earlier. But if and when any such things should happen you'd know about it because I would tell you!

We also talked about the rollover registration problem, I'll follow up on that.

Wednesday 6 February 2008

evo on osX

GridPP uses the evo software for most of its videoconference meetings. It normally works pretty well (especially when compared to VRVS / Access Grid) and the audio on my mac laptop is surprisingly good without the need of a headset.

However, today's Java Web Start did an upgrade to 1.0.8 and broke the video client - it just refused to start.

The problem is caused by an incorrect shell script test in ~/.Koala/plugins/ViEVO/ViEVO:

if [ "$OSTYPE" -ne "darwin9.0" ]; then

should be

if [ "$OSTYPE" != "darwin9.0" ]; then

and all works fine. RT Ticket [vrvs.org #2615] has been raised with the developers.
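For anyone wondering why the one-operator change matters: -ne is the integer comparison, so with a string like "darwin9.0" the test errors out in bash ("integer expression expected"), returns non-zero, and the "then" branch never runs. != is the string comparison. Here is a minimal sketch of the two forms side by side; the function names are mine and are not part of the EVO script.

```shell
#!/bin/sh
# Hypothetical helpers for illustration only.

# Correct form: string comparison with !=
is_not_darwin9() {
    if [ "$1" != "darwin9.0" ]; then
        echo "yes"
    else
        echo "no"
    fi
}

# Broken form: -ne expects integers, so the test itself fails
# (error message suppressed here) and we always fall through to "else".
broken_is_not_darwin9() {
    if [ "$1" -ne "darwin9.0" ] 2>/dev/null; then
        echo "yes"
    else
        echo "no"
    fi
}

is_not_darwin9 "linux-gnu"
broken_is_not_darwin9 "linux-gnu"
```

With the broken form the answer is "no" whatever OSTYPE is, which is why the wrong branch of the script gets taken.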

Monday 4 February 2008

Out in the big world

Thought I'd start blogging some CA stuff because a lot of things happen which you may not otherwise hear about (or indeed care about, but at least you have the option). CAs are kind of operational so I thought it fits OK here. It ain't storage, that I know (except see below).

Starting in the big world, we now have Ukraine and Morocco on board. I was a reviewer for both. Much of my review work (specifically travel to the meetings) is funded by GridPP, so we can count this as yet another GridPP contribution to the global grid, I'd have thunk. If that helps.

Anyway, a review often takes many iterations (I counted nine with someone, Mexico I think, and we're still not quite done), and the whole process from being a glint in someone's eye to being a fully accredited CA can take years. There are usually two reviewers, occasionally three, all of whom need to say "aye". There is some grumbling - particularly in a certain large country with more than one CA - that it takes so long, and they would like to cut corners. I am working on some IGTF stuff which should speed up the process without the corner cutting but it's a bit experimental. Other people from the afore-not-mentioned country have had related ideas and this will be tied in.

Latvia have applied for membership (I am also a reviewer here); they were previously covered by the Estonian run BalticGrid CA (which incidentally I also reviewed back in '05), but for various reasons now want one for themselves. Lithuania is still in BalticGrid with no plans otherwise.

Among the more politically interesting, we find the area known as FYROM or Macedonia, depending on who you ask, who want to join with a CA under the name of Macedonia, which made the Greeks jump. Iran is now also interested in joining and have started setting up a CA; unfortunately they find it hard to travel and even videoconferencing with them can be embargoed. I am not reviewing those two though.

Oh, and a tearful goodbye and cheerio to the old CERN CA, headed by Ian Neilson. The CA community expressed its gratitude to the CA which was one of the earliest ones. Since CAs must archive everything for at least three years, Ian is now wondering how to archive about 1TB's worth of email which is mostly spam...

Incidentally, CERN and INFN who both have expired CAs noticed a large number of attempted CRL downloads, which indicates that someone's systems haven't been updated. On CERN's list, at #8, was a UK site which shall remain nameless. It was an NGS site, though.

Belgium is also under new management, so to speak, which looks very promising. Till now they were very quiet and it's better that CAs make (the right kind of) noises.

Meanwhile, folk in Armenia are interested in spreading PKI to other countries in the region, the "Silk Highway". This will be based on OpenCA but is so far only a proposal. More on this if it gets funded.

That's all for now. I think next post will be internal - UK e-Science CA notes.

Oh, and about the rollover problems, I filed a bug report against CERN but I'm not confident it ended up in the right hands. It's not just our headache: I counted four other CAs who are currently rolling over, with another nine starting rollover later this year. It is a bug, since Grid CAs only guarantee the subject DN. More later (or on dteam).
